The Ultimate Toolbox for ML Success: A Comparative Analysis for Product Owners

The relationship between ML requirements and development teams, especially within cloud-based microservices environments, cannot be overstated. A microservices architecture decouples components, so ML models can be developed and deployed as independent services. This modularity provides scalability and flexibility, but it also requires clearly defined ML requirements so that each service integrates seamlessly with the others.
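To make "clearly defined ML requirements" concrete, here is a minimal sketch that assumes a team records each ML service's requirements as a small, machine-checkable contract. The `MLServiceRequirement` class, its field names, and the threshold values are hypothetical illustrations, not a standard format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MLServiceRequirement:
    """Hypothetical requirements contract for one ML microservice (illustrative fields)."""
    service_name: str
    min_accuracy: float     # minimum acceptable accuracy on a held-out set (0-1)
    max_latency_ms: float   # maximum acceptable p95 prediction latency
    input_fields: tuple     # fields every request to the service must carry

    def check(self, measured_accuracy: float, measured_p95_ms: float,
              request_fields: set) -> list:
        """Return human-readable violations; an empty list means the service passes."""
        violations = []
        if measured_accuracy < self.min_accuracy:
            violations.append(
                f"accuracy {measured_accuracy:.2f} < required {self.min_accuracy:.2f}")
        if measured_p95_ms > self.max_latency_ms:
            violations.append(
                f"p95 latency {measured_p95_ms:.0f} ms > allowed {self.max_latency_ms:.0f} ms")
        missing = set(self.input_fields) - set(request_fields)
        if missing:
            violations.append(f"missing input fields: {sorted(missing)}")
        return violations


# Example: a hypothetical equipment-failure prediction service
req = MLServiceRequirement(
    service_name="failure-predictor",
    min_accuracy=0.90,
    max_latency_ms=200,
    input_fields=("machine_id", "temperature", "vibration"),
)
print(req.check(measured_accuracy=0.93, measured_p95_ms=250,
                request_fields={"machine_id", "temperature"}))
```

Writing requirements this way lets QA and DevOps check each service automatically before it joins the wider architecture, instead of relying on prose specifications alone.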

In the rapidly evolving world of technology, Machine Learning (ML) has become a cornerstone of innovation across industries. For Product Owners, mastering the intricacies of ML is no longer optional; it is a necessity. Whether in manufacturing, healthcare, transportation, or retail, the ability to define and manage ML requirements is key to success. This blog reflects my experience in different product management roles, working with Data Science, Software Development, QA, DevOps, Onboarding, Technical Support, and cross-functional teams. It offers insights into the best tools available for defining ML requirements, the essential knowledge a Product Owner must have, and how these tools can be integrated effectively within cloud-based microservices.

Drawing on that experience, we’ll explore the tools that can help Product Owners harness ML, analyze the major market players, and examine common challenges and their solutions. We’ll also look at the future of ML in various sectors and sketch a roadmap for the next five years.

By the end of this blog, you’ll have a basic understanding of the ML landscape and a set of initial guidelines to drive your projects forward and stay ahead of the curve. This guide is designed to empower you as a Product Owner in the ever-evolving world of Machine Learning.

To effectively manage ML projects, a Product Owner must be well-versed in the terminology that underpins the technology. Terms like "supervised learning," "unsupervised learning," "neural networks," and "data preprocessing" are foundational concepts. Supervised learning involves training a model on labeled data, allowing it to predict outcomes based on input data. In contrast, unsupervised learning identifies patterns in data without predefined labels. Understanding these terms is crucial for communicating with development teams and ensuring that ML requirements are clearly defined.
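The difference between supervised and unsupervised learning is easiest to see side by side. The sketch below uses only the Python standard library and toy one-dimensional data (the values are made up for illustration). A nearest-centroid classifier learns from labeled examples, while a tiny k-means routine groups unlabeled values without being told what the groups mean. Both are simplified stand-ins for what libraries such as scikit-learn provide.

```python
from statistics import mean


# --- Supervised: learn from labeled data, then predict a label ---
def train_nearest_centroid(values, labels):
    """Compute the mean value (centroid) of each label."""
    return {
        label: mean(v for v, lab in zip(values, labels) if lab == label)
        for label in set(labels)
    }


def predict(centroids, value):
    """Assign the label whose centroid is closest to the value."""
    return min(centroids, key=lambda label: abs(centroids[label] - value))


# --- Unsupervised: find groups in unlabeled data ---
def kmeans_1d(values, k=2, iterations=10):
    """Very small 1-D k-means; returns the sorted cluster centers."""
    centers = sorted(values)[::max(1, len(values) // k)][:k]  # naive initialization
    for _ in range(iterations):
        clusters = [[] for _ in centers]
        for v in values:
            nearest = min(range(len(centers)), key=lambda i: abs(centers[i] - v))
            clusters[nearest].append(v)
        # Move each center to the mean of its cluster (keep it if the cluster is empty)
        centers = [mean(c) if c else centers[i] for i, c in enumerate(clusters)]
    return sorted(centers)


# Toy data: transaction amounts (illustrative values only)
amounts = [10, 12, 11, 95, 100, 105]
labels = ["normal", "normal", "normal", "suspicious", "suspicious", "suspicious"]

model = train_nearest_centroid(amounts, labels)
print(predict(model, 90))   # supervised: relies on the labels we provided
print(kmeans_1d(amounts))   # unsupervised: discovers two groups on its own
```

Note that k-means finds the two groups but cannot name them "normal" and "suspicious"; attaching that meaning still requires labels or human judgment, which is exactly the distinction a Product Owner needs when scoping data requirements.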

When it comes to selecting tools for defining ML requirements, the market is flooded with options. In this section, we'll compare leading ML platforms, including Databricks, Google AI, and ChatGPT, against their competitors. Each tool has its strengths and weaknesses, and the choice often depends on the specific needs of your project.

For instance, Databricks excels at providing a collaborative environment for data scientists, with robust support for big data analytics. However, open-source Apache Spark, the engine Databricks is built on, offers similar core capabilities without the managed platform, at a different scale and price point. Google AI, on the other hand, integrates seamlessly with Google Cloud, making it a natural choice for organizations already invested in that ecosystem. Meanwhile, ChatGPT and its competitors bring powerful natural language processing (NLP) capabilities to the table, enabling more intuitive interactions with data.

Defining ML requirements is only one part of the equation. The real challenge lies in integrating these requirements within cloud-based microservices. This approach allows for scalable, modular development, where different components of the application can be developed, deployed, and scaled independently. As a Product Owner, understanding how to align ML requirements with this architecture is crucial.
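As a minimal sketch of what "an ML model as an independent service" can look like, the example below wraps a placeholder model behind a JSON-over-HTTP endpoint using only Python's standard library. The `/predict` route, the request fields, and the `score` function are hypothetical; a production service would typically use a web framework, a real trained model, and the cloud platform's own deployment tooling.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def score(features: dict) -> float:
    """Placeholder 'model': a hand-written linear score with hypothetical weights."""
    return 0.02 * features.get("temperature", 0) + 0.5 * features.get("vibration", 0)


class PredictHandler(BaseHTTPRequestHandler):
    """Serves POST /predict: JSON features in, JSON risk score out."""

    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404, "unknown route")
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            features = json.loads(self.rfile.read(length))
        except ValueError:  # covers malformed JSON and undecodable bytes
            self.send_error(400, "body must be valid JSON")
            return
        if not isinstance(features, dict):
            self.send_error(400, "body must be a JSON object")
            return
        body = json.dumps({"risk_score": score(features)}).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def make_server(host: str = "127.0.0.1", port: int = 8080) -> HTTPServer:
    """Create the service; call .serve_forever() on the result to start it."""
    return HTTPServer((host, port), PredictHandler)
```

Once started with `make_server().serve_forever()`, a client could POST `{"temperature": 50, "vibration": 2}` to `http://127.0.0.1:8080/predict` and receive a JSON risk score. Because the model sits behind its own endpoint, it can be retrained, redeployed, or scaled without touching the services that call it.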

For example, in a manufacturing setting, ML models might be used to predict equipment failures. These models need to be integrated into the larger microservices architecture to ensure real-time monitoring and response. The same principles apply across industries, from healthcare to retail, where ML models drive decisions in areas like patient diagnosis or inventory management.
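For the manufacturing example, the sketch below shows the monitoring side of such a service: it keeps a rolling window of sensor readings and raises an alert when a new reading deviates sharply from recent history. A rolling z-score is a deliberately simple stand-in for a trained failure-prediction model; the window size, the threshold of 3 standard deviations, the minimum history of 5 readings, and the vibration values are assumptions chosen for illustration.

```python
from collections import deque
from statistics import mean, pstdev


class RollingAnomalyMonitor:
    """Flags readings far outside the recent rolling window (z-score rule)."""

    def __init__(self, window: int = 20, threshold: float = 3.0):
        self.readings = deque(maxlen=window)  # oldest readings drop off automatically
        self.threshold = threshold

    def update(self, value: float) -> bool:
        """Return True if `value` looks anomalous relative to the current window."""
        alert = False
        if len(self.readings) >= 5:  # wait for a minimal history before judging
            mu = mean(self.readings)
            sigma = pstdev(self.readings)
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                alert = True
        self.readings.append(value)
        return alert


monitor = RollingAnomalyMonitor(window=10, threshold=3.0)
vibration = [1.0, 1.1, 0.9, 1.0, 1.05, 0.95, 1.0, 4.2]  # illustrative sensor stream
alerts = [i for i, v in enumerate(vibration) if monitor.update(v)]
print(alerts)  # indices of readings that triggered an alert
```

In a microservices setup, a component like this would consume the sensor stream and publish alerts for maintenance systems, while a trained model could later replace the z-score rule behind the same interface.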

The Pareto chart below identifies the key challenges manufacturers face when implementing Machine Learning. Data quality is the most significant challenge, followed by integration issues and costs. The cumulative curve shows that addressing the top three challenges could mitigate the majority of implementation barriers. Source: Manufacturing Industry Surveys (2023).

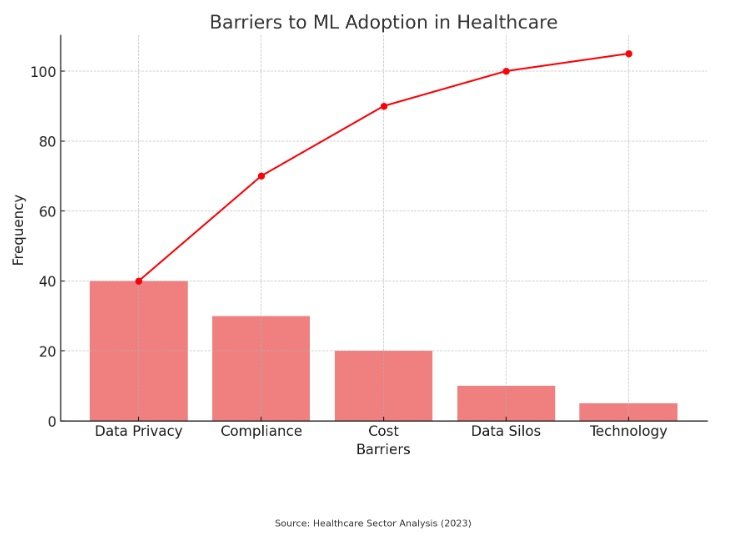
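The cumulative curve in a Pareto chart is simple arithmetic: sort the categories by frequency, then divide the running total by the grand total. The sketch below shows that calculation. The counts are hypothetical placeholders, not figures from the survey cited with the chart, so substitute real survey data before drawing any conclusions.

```python
def pareto_cumulative(counts: dict) -> list:
    """Return (category, count, cumulative_percent) rows, largest category first."""
    total = sum(counts.values())
    rows, running = [], 0
    for category, count in sorted(counts.items(), key=lambda kv: kv[1], reverse=True):
        running += count
        rows.append((category, count, round(100 * running / total, 1)))
    return rows


# HYPOTHETICAL counts for illustration only; these are not the survey's figures.
challenge_counts = {"Data quality": 40, "Integration issues": 25, "Costs": 20, "Other": 15}

for category, count, cumulative_pct in pareto_cumulative(challenge_counts):
    print(f"{category:<20}{count:>4}{cumulative_pct:>8.1f}%")
```

Reading the last column top to bottom tells you how much of the total problem volume you address by tackling each additional category, which is the basis for prioritizing the top three.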

The chart below highlights the barriers to ML adoption in the healthcare sector, with data privacy concerns being the most prominent. Regulatory compliance and its associated costs are also significant hurdles. Understanding and addressing these barriers is crucial for the widespread adoption of ML in healthcare. Source: Healthcare Sector Analysis (2023).

The ML landscape is dominated by a few key players, each with its own value proposition. In addition to Databricks and Google AI, platforms such as Microsoft Azure ML, Amazon SageMaker, and IBM Watson are worth considering. Microsoft Azure ML offers a comprehensive suite of tools for building, training, and deploying ML models. Amazon SageMaker provides an end-to-end solution for ML, from data labeling to model deployment, with deep integration into AWS services. IBM Watson, known for its NLP capabilities, has been applied in industries like healthcare, in use cases ranging from diagnosis support to patient care.

ML is transforming industries, but it’s not without its challenges. In manufacturing, for instance, one of the primary challenges is the quality and availability of data. Without accurate and comprehensive data, ML models cannot deliver reliable predictions. In healthcare, data privacy and compliance with regulations like HIPAA present significant hurdles. Meanwhile, in transportation and logistics, the challenge lies in integrating ML models with legacy systems and ensuring real-time decision-making.
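Because data quality is the first hurdle, a Product Owner can turn "accurate and comprehensive data" into a measurable acceptance criterion. The sketch below reports, for each field, the share of missing and out-of-range values. The field names, the expected ranges, and the sample readings are hypothetical.

```python
def data_quality_report(records, expected_ranges):
    """Summarize missing and out-of-range values per field.

    records: list of dicts, one per sensor reading or row
    expected_ranges: {field: (min_allowed, max_allowed)}, hypothetical limits
    """
    report = {}
    n = len(records)
    for field, (low, high) in expected_ranges.items():
        values = [r.get(field) for r in records]  # absent keys count as missing
        missing = sum(v is None for v in values)
        out_of_range = sum(v is not None and not (low <= v <= high) for v in values)
        report[field] = {
            "missing_pct": round(100 * missing / n, 1),
            "out_of_range_pct": round(100 * out_of_range / n, 1),
        }
    return report


readings = [
    {"temperature": 71.2, "vibration": 0.8},
    {"temperature": None, "vibration": 0.9},
    {"temperature": 450.0, "vibration": 1.1},  # implausible value, likely a sensor fault
    {"temperature": 69.8},                     # vibration missing entirely
]
print(data_quality_report(readings, {"temperature": (-20, 150), "vibration": (0, 10)}))
```

A requirement such as "no field may exceed 5% missing values" can then be checked against this report before any model training starts.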

The trend chart below illustrates the increasing adoption of Machine Learning across key industries such as healthcare, manufacturing, retail, transportation, and agriculture from 2019 to 2024. Healthcare leads the adoption curve, followed by retail and manufacturing. The steady growth in these sectors highlights the expanding role of ML in driving industry-specific innovation. Source: Industry Analysis Reports (2019-2024).

The data, privacy, and integration challenges described earlier are being addressed through a combination of technological innovation and strategic planning. In manufacturing, companies are investing in IoT devices to gather real-time data, which is then used to train ML models. In healthcare, advances in federated learning allow ML models to be trained across institutions without centralizing patient data, which reduces privacy risk. In transportation and logistics, edge computing brings ML models closer to the data source, reducing latency and improving decision-making.
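To illustrate the idea behind federated learning, here is a minimal sketch of federated averaging (FedAvg). Each site (for example, a hospital) trains on its own data and shares only model parameters, and a coordinator combines them into an average weighted by each site's number of examples. The toy one-feature linear model, the learning rate, the epoch and round counts, and the data are assumptions for illustration; real deployments often add secure aggregation, differential privacy, and a full ML framework.

```python
def local_train(weight, bias, data, lr=0.01, epochs=50):
    """Gradient descent on one site's private (x, y) pairs for y ≈ weight*x + bias."""
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (weight * x + bias - y) * x for x, y in data) / n
        grad_b = sum(2 * (weight * x + bias - y) for x, y in data) / n
        weight -= lr * grad_w
        bias -= lr * grad_b
    return weight, bias


def federated_average(updates):
    """Combine (weight, bias, n_examples) tuples into a size-weighted average."""
    total = sum(n for _, _, n in updates)
    avg_w = sum(w * n for w, _, n in updates) / total
    avg_b = sum(b * n for _, b, n in updates) / total
    return avg_w, avg_b


# Raw records never leave a site; only (weight, bias, size) is shared.
site_data = {
    "hospital_a": [(1.0, 3.0), (2.0, 5.0), (3.0, 7.0)],  # toy data following y = 2x + 1
    "hospital_b": [(4.0, 9.0), (5.0, 11.0)],
}

w, b = 0.0, 0.0  # global model held by the coordinator
for _ in range(200):  # communication rounds
    updates = []
    for data in site_data.values():
        local_w, local_b = local_train(w, b, data)
        updates.append((local_w, local_b, len(data)))
    w, b = federated_average(updates)

print(round(w, 2), round(b, 2))  # should approach the shared relationship y = 2x + 1
```

The coordinator only ever sees model parameters, which is why federated learning reduces, but does not by itself eliminate, privacy risk.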

The next five years will see significant advancements in ML, particularly in areas like explainable AI, which aims to make ML models more transparent and understandable. As the technology matures, we can expect to see wider adoption across industries, with ML becoming a standard component of business operations. However, this growth will also bring new challenges, particularly in terms of ethics and governance.
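Explainable AI covers many techniques. One of the simplest to explain to stakeholders is permutation feature importance: shuffle one input feature and measure how much the model's error grows. The sketch below applies it to a toy model with made-up data; the `model` function and the feature names are hypothetical, and scikit-learn ships a more complete implementation as `sklearn.inspection.permutation_importance`.

```python
import random


def model(row):
    """Hypothetical trained model: depends strongly on temperature, weakly on humidity."""
    return 3.0 * row["temperature"] + 0.1 * row["humidity"]


def mean_abs_error(rows, targets):
    return sum(abs(model(r) - t) for r, t in zip(rows, targets)) / len(rows)


def permutation_importance(rows, targets, feature, repeats=20, seed=0):
    """Average increase in error when `feature` is shuffled across rows."""
    rng = random.Random(seed)
    baseline = mean_abs_error(rows, targets)
    increases = []
    for _ in range(repeats):
        shuffled = [r[feature] for r in rows]
        rng.shuffle(shuffled)
        permuted = [{**r, feature: v} for r, v in zip(rows, shuffled)]
        increases.append(mean_abs_error(permuted, targets) - baseline)
    return sum(increases) / repeats


data_rng = random.Random(42)
rows = [{"temperature": data_rng.uniform(0, 10), "humidity": data_rng.uniform(0, 10)}
        for _ in range(100)]
targets = [model(r) for r in rows]  # toy targets generated by the model itself

for feature in ("temperature", "humidity"):
    print(feature, round(permutation_importance(rows, targets, feature), 2))
```

A large score means the model leans heavily on that feature, which gives non-specialists a concrete, model-agnostic way to question whether a model relies on the inputs it should.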

For Product Owners, staying ahead of these trends will be crucial. This means not only keeping up with the latest tools and technologies but also understanding the broader implications of ML on business and society.

Choosing the right platform is critical to supporting ML development. Let's return to Databricks, Google AI, and ChatGPT and weigh their advantages and disadvantages against their competitors.

Databricks, for instance, offers strong scalability and support for big data analytics, making it a good fit for large-scale projects. However, its complexity may be a barrier for smaller teams. Google AI, with its deep integration into Google Cloud, offers a more accessible solution, particularly for organizations already invested in the Google ecosystem. ChatGPT and similar NLP tools have a distinct advantage where natural language processing is a priority, though they may lack the depth of more specialized ML platforms.

As a Product Owner, understanding when to use ML, AI, or generative AI (GenAI) is crucial. ML is best suited for tasks that involve pattern recognition and prediction, such as fraud detection or customer segmentation. AI, the broader field that ML belongs to, also covers approaches suited to more complex tasks that require reasoning and decision-making. GenAI, the most recent wave, is designed for scenarios where creativity and problem-solving are key, such as generating new content or designing products.

Each of these technologies requires a different skill set and approach, and knowing when to use each one is key to success.

To excel as an ML Product Owner, continuous learning is essential. Certifications like AWS Certified Machine Learning, Google Professional Machine Learning Engineer, and Microsoft Certified: Azure AI Engineer Associate can provide a solid foundation. Additionally, online platforms like Coursera, Udacity, and edX offer specialized courses that can help you stay ahead of the curve.

For those looking to deepen their knowledge, attending conferences and networking with industry experts can also provide valuable insights. As someone who has navigated the complexities of both electrical and software engineering, I can attest to the importance of staying curious and always seeking new challenges.

As we look to the future, the role of the Product Owner in ML projects will only become more critical. By understanding the tools available, mastering the necessary terminology, and staying ahead of industry trends, you can drive your projects to success. Whether you're working in manufacturing, healthcare, transportation, or retail, the principles outlined in this blog will serve as a guide to navigating the complex world of ML.

In closing, I encourage you to explore the resources and tools mentioned in this blog, and to continuously seek out new opportunities for growth and learning. The road ahead is filled with challenges, but with the right approach, the possibilities are endless.

If you found this blog insightful and valuable, I'd love to hear your thoughts! Please share this article on your social networks, leave a comment below with your feedback, or reach out to me directly through LinkedIn or my personal blog. Whether you have questions, suggestions, or just want to discuss how we can leverage ML tools for your next project, let's keep the conversation going. Don't forget to click 'like' if you enjoyed the read, and consider subscribing for more in-depth analyses and practical tips on mastering the latest technologies. Your engagement helps us all grow and learn together!

